Credit: Photo by Carl Wang on Unsplash

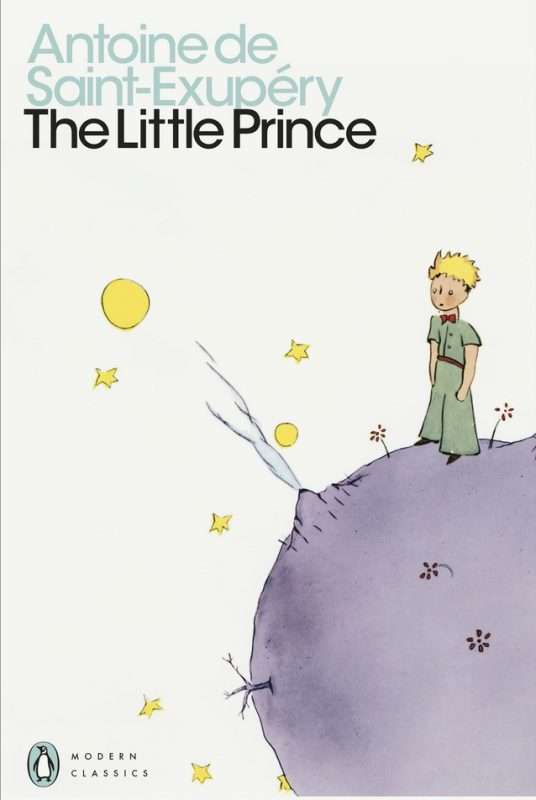

Anyone familiar with The Little Prince by French writer and aviator Antoine de Saint-Exupéry will hopefully have had a chance to see Spare Parts Puppet Theatre’s latest production of this timeless story; a show which invites you to explore the world with fresh eyes.

One of the central themes in the original work is the concept of ‘serious matters’, in particular the difference between the priorities of adults and children. If you are familiar with The Little Prince, you will recall Drawing Number One, which the child narrator uses to test the adults he meets. To most adults, the drawing looks like your favourite Akubra hat, yet it is actually a boa constrictor that has swallowed an elephant.

There are some ‘serious matters’ going on around the world at the moment, and it is difficult to know how to take stock. As the little prince explains to the castaway, ‘what is essential is invisible to the eye’.

Also, some less serious matters.

A few weeks ago, OpenAI added a new image generator to ChatGPT, and people quickly began using it to create pictures in the nostalgic style of Studio Ghibli and other Japanese anime. So many humans around the world tried it out that ChatGPT’s servers struggled to keep up; in the words of OpenAI co-founder and CEO Sam Altman, ‘our GPUs are melting’. More recently, the latest craze has been to create your own Barbie or action figure in a box.

Source: https://www.youtube.com/watch?v=1Uofhiz05yg

Whilst these fads will die down in a twinkling, how many of us recognise that these moments of fun are part of a larger environmental issue?

AI is accelerating climate change.

Long gone are the days when communication from a distance happened by letter or landline, when we knew how much a single phone call cost and postage rates were common knowledge. We are now able to track our emissions if we fly. But how many of us consider how our use of technology may be consuming energy and increasing emissions?

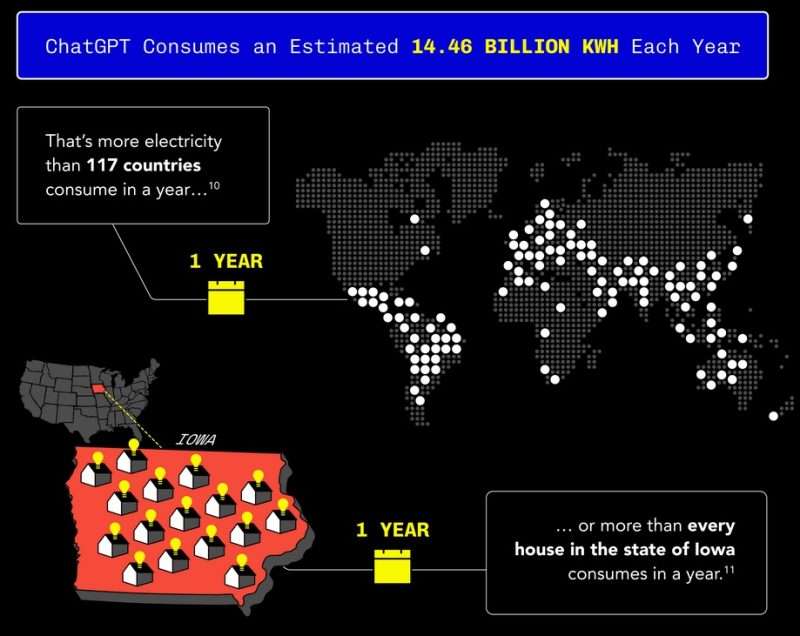

I haven’t been able to find any information on image generation, but according to a recent study by The Washington Post and the University of California, using GPT-4 to generate a 100-word email uses 0.14 kilowatt-hours (kWh), the equivalent of powering 14 LED light bulbs for an hour. Based on that study, Business Energy UK has calculated that in one year:

Source: Business Energy UK

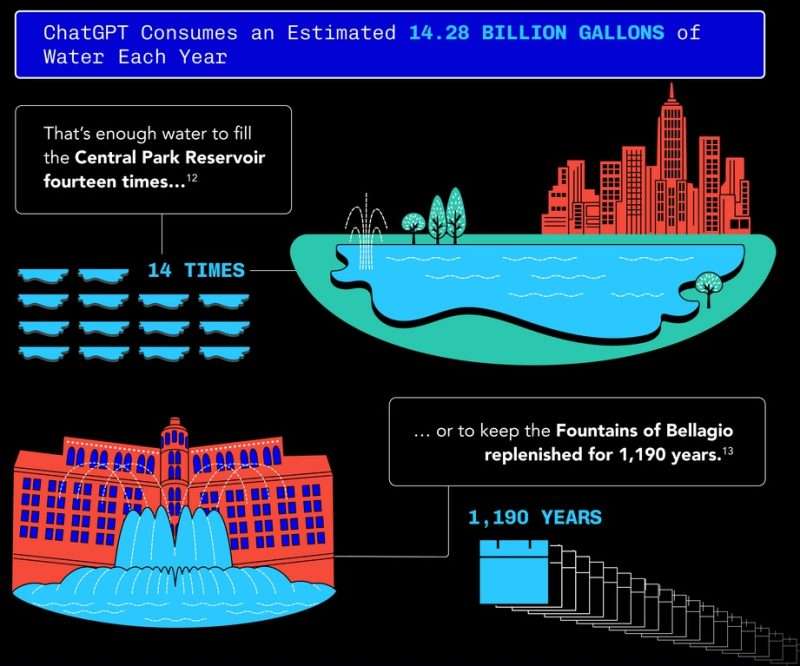
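The light-bulb comparison is easy to check. The minimal Python sketch below works backwards from the study’s 0.14 kWh figure to the wattage each bulb would need to draw, then scales the per-email figure to a habit of one AI-written email a week for a year. The function names and the one-email-a-week habit are illustrative assumptions, not figures from the study or from Business Energy UK.

```python
# Figure quoted from the Washington Post / University of California study.
ENERGY_PER_EMAIL_KWH = 0.14  # one 100-word email generated with GPT-4
BULB_COUNT = 14              # LED bulbs in the comparison
HOURS = 1.0                  # lit for one hour


def implied_bulb_watts(energy_kwh: float, bulbs: int, hours: float) -> float:
    """Power (W) each bulb must draw for `bulbs` bulbs over `hours` to use `energy_kwh`."""
    # Convert kWh to Wh, then share it across the bulbs and the hours.
    return energy_kwh * 1000 / (bulbs * hours)


def yearly_energy_kwh(emails_per_week: float,
                      energy_per_email_kwh: float = ENERGY_PER_EMAIL_KWH) -> float:
    """Energy for a hypothetical habit of AI-written emails, over 52 weeks."""
    return emails_per_week * 52 * energy_per_email_kwh


if __name__ == "__main__":
    watts = implied_bulb_watts(ENERGY_PER_EMAIL_KWH, BULB_COUNT, HOURS)
    print(f"Each bulb would draw about {watts:.0f} W")
    # Hypothetical habit: one AI-written email a week for a year.
    print(f"One email a week: about {yearly_energy_kwh(1):.2f} kWh a year")
```

The result of about 10 W per bulb is roughly what a common household LED bulb draws, which is why the comparison holds. Any yearly total is only as reliable as the single per-email estimate it is built on.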

Any prompt to ChatGPT or other AI models, whether to generate an image, carry out some research or write an email, starts a process which not only uses vast amounts of energy but often also draws on water systems to cool the servers and prevent them overheating. The same Washington Post/University of California study found that generating a 100-word email consumes 519 millilitres of water. Based on this study, Business Energy UK also calculated that in one year:

Source: Business Energy UK

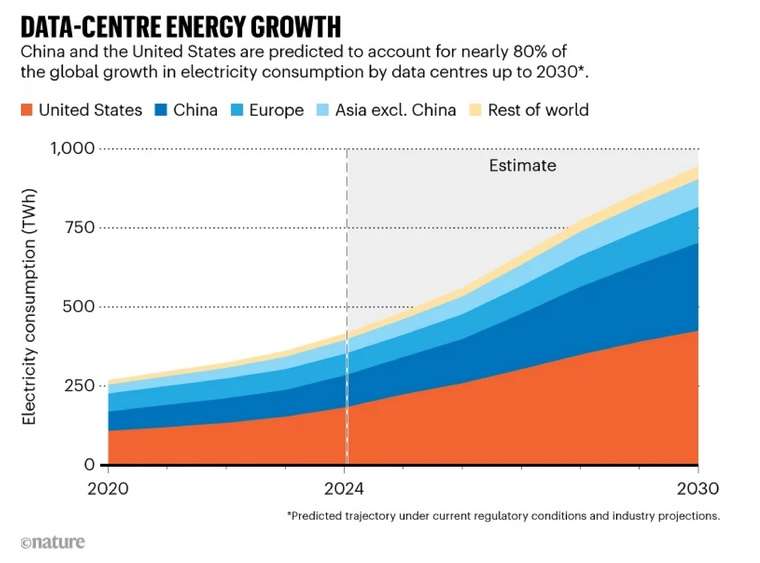

There is also the energy consumption of the data centres which host the servers for ChatGPT, as well as for other AI and cloud storage systems. According to a report from the International Energy Agency published on 10 April 2025 (IEA Report), the electricity consumption of data centres is projected to more than double by 2030, and the primary culprit for this increase is AI.

Source: International Energy Agency (CC BY 4.0)

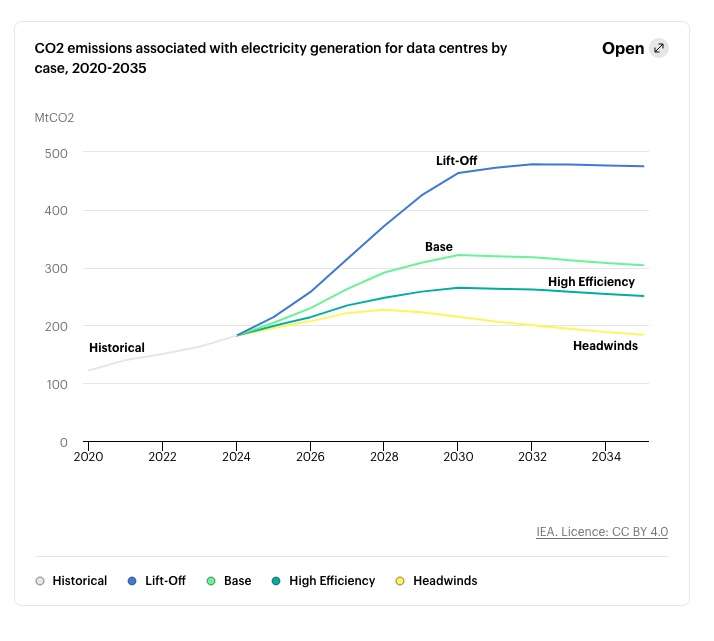

The same report by the International Energy Agency explores what AI’s impact on emissions might be. Whilst it is noted that the share of data centres in aggregate emissions may be small, data centres are among the few sectors – along with road transport and aviation – that see an increase in their direct and indirect emissions to 2030 as shown in the chart below.

Source: International Energy Agency (CC BY 4.0)

Whilst this chart explores four potential scenarios, in which emissions either rise at different rates or fall slightly, the IEA Report also describes, but does not chart, a ‘Widespread Adoption Case’. In this case, breakthrough discoveries over the next decade could lead to emissions reductions three times larger than total data centre emissions in the Lift-Off Case.

Even with this latest report from the International Energy Agency, the impact of AI on emissions is difficult to see; you might even say ‘invisible’. With reports that coal and gas producers in Australia are finding it difficult to tame their emissions, how can we trust that AI will not present similar or even more difficult challenges?

There are also other, more immediate concerns with AI, some of which may never be addressed because the horse has already bolted, just like the colt from Old Regret in Banjo Paterson’s The Man from Snowy River. One courageous and experienced British investigative journalist and features writer, Carole Cadwalladr, has described what is happening as nothing less than ‘a digital coup’.

AI has been trained using mountains of work: hours of labour and creative endeavour by anyone who has written anything on the internet, as well as by photographers, musicians, filmmakers and other creatives. This includes all of the works of Western Australia’s very own Tim Winton, along with those of thousands of other Australian authors like Helen Garner, Richard Flanagan and Charlotte Wood. This was of great concern to writer Emily Paull when I met with her recently to talk about the publication of her first novel, The Distance Between Dreams.

Even worse, First Nations peoples are also experiencing theft through AI, with their culture, knowledge and stories being fed into AI models without consent and with no guardrails in place. And we are only just beginning to redress some of the harms First Nations people have already experienced.

Whilst lawsuits have been initiated, it is unlikely there will ever be restorative justice for all of these wrongs. Yet the pace of AI adoption continues to accelerate.

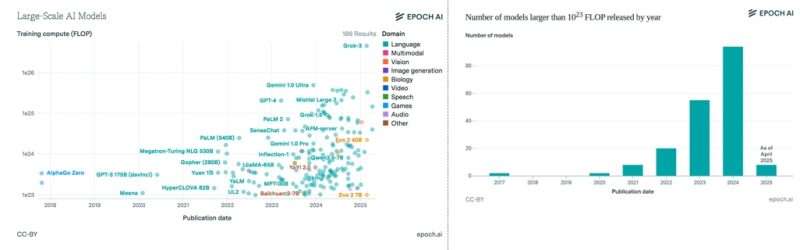

Here are some charts which show the pace. They are based on models created by Epoch AI, a US-based, non-profit, multidisciplinary research institute investigating the trajectory of AI, and are available from epoch.ai.

As a parent of high-school aged children, I’m very much aware of how technology and social media in particular have permeated our lives at such a pace that we are yet to ensure it is safe. In the early days of social media, like AI now, it appeared it would bring many benefits: how wonderful it was to more easily connect with loved ones and friends at home and afar, especially to share photos and videos. Also, social media promised to be a force for free speech and connection.

Today, these platforms harvest our data, promote misinformation, facilitate cyber-crime and, for many humans, have had a negative impact on mental health. All of this accelerated during the COVID pandemic, bringing with it a loneliness epidemic together with an infodemic (see Carole Cadwalladr’s TED talk on this). On a bigger scale, social media is a substantial piece in the kaleidoscope of our current world, where surveillance by profit-driven tech monopolies is a given, trust has been eroded, society feels more divided, and even the rule of law is being challenged.

With the benefit of hindsight, are the products worth the harms? Is the convenience of connection worth the loss of privacy, the loss of control over your information? Would more proactive governance of social media before widespread uptake have prevented many of the harms? Alas, we cannot go back in time.

We need to ask the same questions about AI. There is so much uncertainty around AI that it is imperative preventative action be taken posthaste. We need to tackle the ‘serious matters’ around the risks of AI, including AI’s impact on emissions and climate change. Who is best placed to steer our ship through this next technological revolution?

Credit: IvelinRadkov, iStock

As the little prince asked, what is essential? This is also what we must ask ourselves the next time we use AI. A single person making fewer queries may seem small, but when multiplied by millions of users, the energy savings can be significant. And in doing so, we help ensure AI is nothing more than Assistive Intelligence. This is how Audrey Tang, Taiwan’s first Minister of Digital Affairs and current Ambassador-at-Large, prefers to describe AI: using technology to enhance human decision-making rather than creating machines smarter than humans. This is what is essential for a just and safe future for our world.
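To put the ‘multiplied by millions’ point into numbers, here is a minimal Python sketch that reuses the per-email energy and water figures from the Washington Post/University of California study. The user count, the number of skipped queries and the function name are hypothetical, and treating every skipped query as costing the same as one 100-word GPT-4 email is an assumption; comparable figures for image generation could not be found.

```python
# Per-email figures quoted from the Washington Post / University of California study.
ENERGY_PER_EMAIL_KWH = 0.14  # kilowatt-hours per 100-word email
WATER_PER_EMAIL_L = 0.519    # 519 millilitres, expressed in litres


def collective_savings(users: int, skipped_per_user: int) -> tuple[float, float]:
    """Energy (kWh) and water (L) saved if every user skips the same number of queries.

    Assumption: each skipped query costs as much as one 100-word GPT-4 email.
    Real prompts (images, research) may use more or less.
    """
    skipped = users * skipped_per_user
    return skipped * ENERGY_PER_EMAIL_KWH, skipped * WATER_PER_EMAIL_L


if __name__ == "__main__":
    # Hypothetical scenario: one million people each skip a single query.
    energy_kwh, water_l = collective_savings(users=1_000_000, skipped_per_user=1)
    print(f"About {energy_kwh:,.0f} kWh and {water_l:,.0f} litres of water saved")
```

Under these assumptions, a million people each skipping one query would save roughly 140,000 kWh and 519,000 litres of water. The calculation is linear, so doubling either the users or the skipped queries doubles both totals.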

If you wish to understand more about AI and how it can be made safer, have a look at https://www.australiansforaisafety.com.au/. Also see our Editor’s story from last year on how the EU is showing Australia the way on regulating AI.

* By Madeleine Cox. Madeleine Cox was raised on a farm on Binjareb Noongar country and now, together with her New Zealand/Aotearoa husband, lives with her children in Fremantle/Walyalup. She loves exploring places and ideas, and connecting with people and nature. This has prompted Madeleine to start writing independently, after many years’ work as a corporate and government lawyer, and service on not-for-profit boards in the health and education sectors. For more articles on Fremantle Shipping News by Madeleine, look here.

~ If you’d like to receive a copy of the sources Madeleine Cox relied on in researching and writing this article or you would like to COMMENT on this or any of our stories, don’t hesitate to email our Editor.

~ WHILE YOU’RE HERE –

PLEASE HELP US TO GROW FREMANTLE SHIPPING NEWS

FSN is a reader-supported, volunteer-assisted online magazine all about Fremantle. Thanks for helping to keep FSN keeping on!

~ Don’t forget to SUBSCRIBE to receive your free copy of The Weekly Edition of the Shipping News each Friday!